Shaping the Future of Explainable AI!

X-by-Design represents the next frontier in AI innovation and is a significant outcome of the European XMANAI project. By prioritizing explainability from the outset, our approach empowers AI designers, engineers, and data scientists to build AI systems that inherently offer transparent insight into their decision-making processes.

Our methodology supports explainability across all stages of AI development, including data preparation, model creation, and result interpretation:

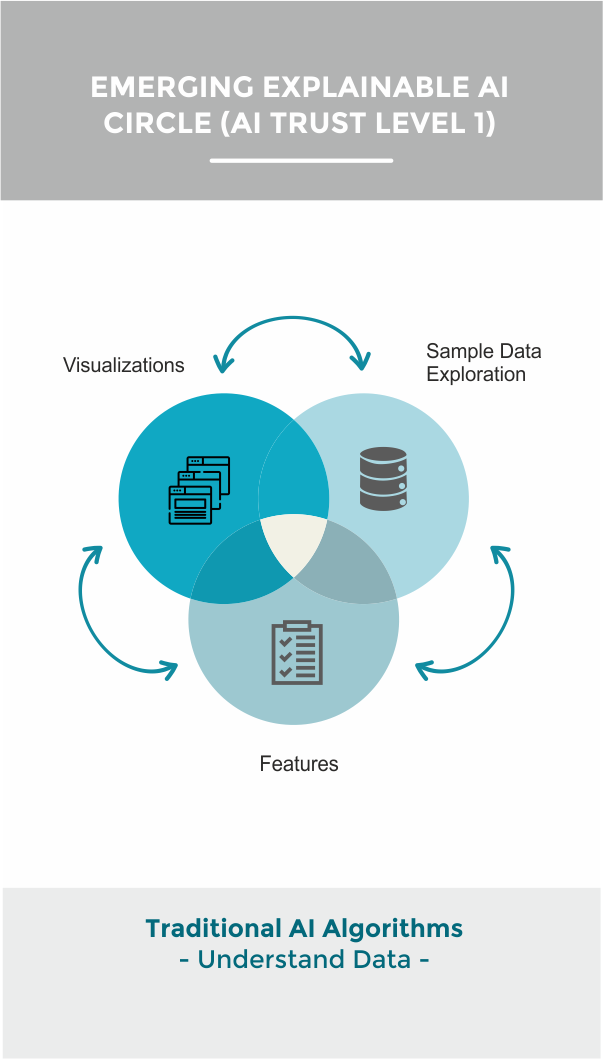

EMERGING EXPLAINABLE AI (XAI) CIRCLE (AI TRUST LEVEL 1)

Traditional AI algorithms, where data scientists focus on understanding the data at hand: exploring the industrial data, experimenting with the extracted features to build appropriate AI models, and visualizing the results. These visualizations typically serve as the primary means of communicating results to business experts and informing them on how to take action.

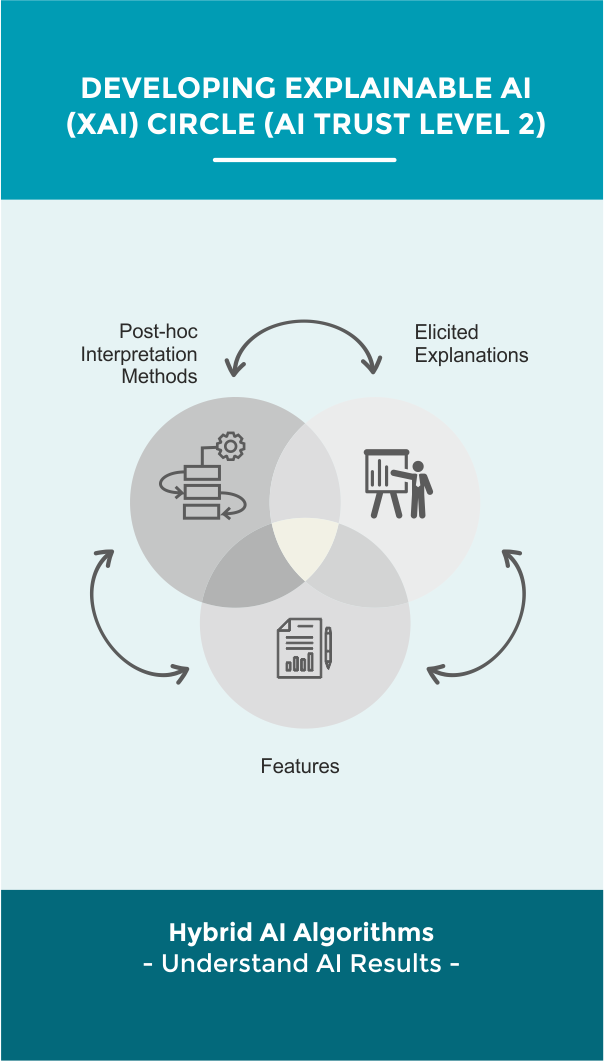

DEVELOPING EXPLAINABLE AI (XAI) CIRCLE (AI TRUST LEVEL 2)

“Hybrid” AI algorithms, in which typical basic machine learning and deep learning algorithms are complemented by post-hoc interpretation methods such as surrogate models. Explainability for business experts is also pursued through explanations elicited by data scientists and by highlighting the features whose presence or absence is necessary for a prediction.
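To make the idea of a post-hoc surrogate model concrete, here is a minimal Python sketch; it is an illustration under stated assumptions, not the XMANAI implementation. It assumes a hypothetical opaque classifier `black_box` that can only be queried, and fits a one-split decision stump (the surrogate) to its predictions. The surrogate's fidelity is the share of samples on which it agrees with the black box. The names `black_box` and `fit_stump` are hypothetical.

```python
import random


# Hypothetical black-box model standing in for an opaque ML/DL classifier.
def black_box(x):
    # Nonlinear rule that the surrogate never inspects directly.
    return 1 if 0.8 * x[0] + 0.3 * x[1] ** 2 > 0.6 else 0


def fit_stump(samples, labels):
    """Fit a stump of the form 'predict 1 if x[j] > t' that best mimics labels.

    Returns (feature_index, threshold, fidelity), where fidelity is the
    fraction of samples on which the stump agrees with the given labels.
    """
    best = (None, None, -1.0)
    n = len(samples)
    for j in range(len(samples[0])):
        # Candidate thresholds: every observed value of feature j.
        for t in sorted({s[j] for s in samples}):
            preds = [1 if s[j] > t else 0 for s in samples]
            agree = sum(p == y for p, y in zip(preds, labels)) / n
            if agree > best[2]:
                best = (j, t, agree)
    return best


random.seed(0)
X = [(random.random(), random.random()) for _ in range(500)]
y = [black_box(x) for x in X]  # query the black box; its internals stay hidden
feature, threshold, fidelity = fit_stump(X, y)
print(f"surrogate rule: predict 1 if x[{feature}] > {threshold:.3f}")
print(f"fidelity to black box: {fidelity:.1%}")
```

A single split is deliberately crude: it trades fidelity for a rule a business expert can read at a glance. Deeper trees or sparse linear surrogates raise fidelity at the cost of simplicity.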

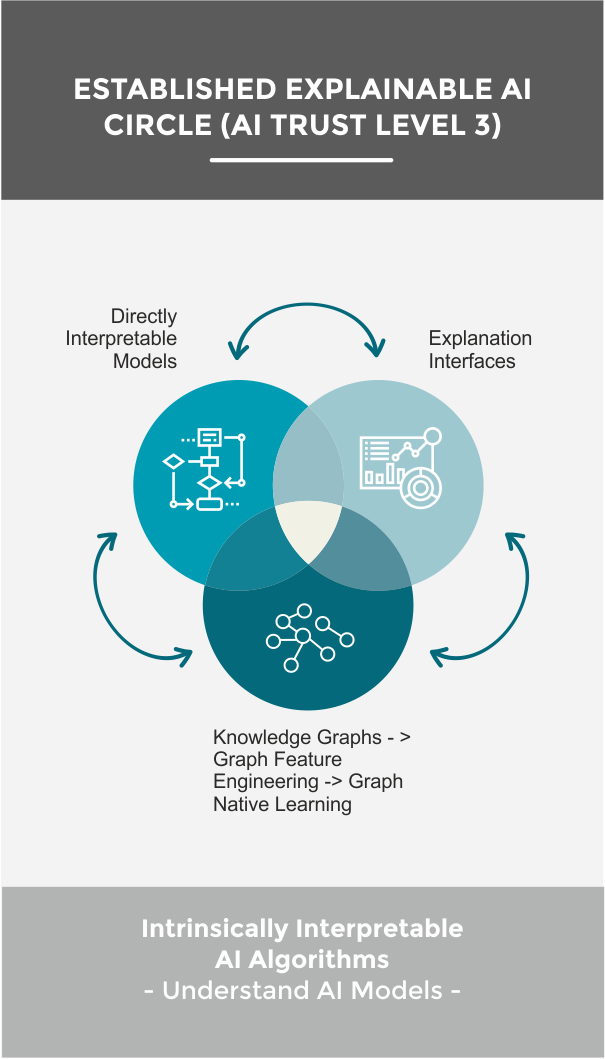

ESTABLISHED EXPLAINABLE AI (XAI) CIRCLE (AI TRUST LEVEL 3)

Intrinsically interpretable AI models, including Graph AI models, which use their knowledge graphs to provide context for decisions and add efficiency and credibility by highlighting which individual layers and thresholds led to a prediction. Business experts, for their part, work with emerging explanation interfaces that help them see how data, predictions, and algorithms actually influence their decisions.

Key Features

- Semantic Data Understanding: Deep comprehension of data semantics and structure, facilitated by a common data model.

- Interactive Exploration: Dynamic data exploration and visualization tools to monitor for potential bias or drift.

- Global and Local Interpretability: Understanding AI models on both macro and micro levels, enabling insights into overall model behavior and specific predictions.

- Post-hoc Explanations: Leveraging techniques such as LIME and SHAP to provide intuitive explanations for model decisions.

- Actionable Insights: Transforming results into concrete actions through fit-for-purpose visualizations, textual explanations, and illustrative examples.
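SHAP is built on Shapley values from cooperative game theory. As a minimal sketch of the local, post-hoc attribution listed above (not the SHAP library's API and not XMANAI's implementation), the Python code below computes exact Shapley values for one prediction by enumerating every feature coalition. It assumes that features outside a coalition are replaced by a single baseline value; `shapley_values`, `risk_model`, and the all-zero baseline are hypothetical.

```python
from itertools import combinations
from math import factorial


def shapley_values(model, x, baseline):
    """Exact Shapley values for one prediction, by enumerating all coalitions.

    Features outside a coalition take their baseline value (one of several
    possible value-function conventions). Cost grows as 2**n_features, so
    this brute-force form only suits a handful of features.
    """
    n = len(x)

    def value(coalition):
        # Keep coalition features from x and reset the rest to the baseline.
        z = [x[i] if i in coalition else baseline[i] for i in range(n)]
        return model(z)

    phi = [0.0] * n
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for size in range(n):
            # Shapley weight |S|! (n - |S| - 1)! / n! for coalitions of this size.
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                phi[i] += weight * (value(s | {i}) - value(s))
    return phi


def risk_model(z):
    # Hypothetical model: additive term in z[0], interaction of z[1] and z[2].
    return 2.0 * z[0] + z[1] * z[2] + 0.5


x = [1.0, 2.0, 3.0]
baseline = [0.0, 0.0, 0.0]
phi = shapley_values(risk_model, x, baseline)
print([round(p, 6) for p in phi])  # → [2.0, 3.0, 3.0]
# Efficiency property: attributions sum to f(x) - f(baseline).
print(round(sum(phi), 6), risk_model(x) - risk_model(baseline))  # → 8.0 8.0
```

The additive feature receives exactly its own contribution, while the interaction term is split evenly between the two features that produce it. Libraries such as SHAP approximate these values efficiently when exhaustive enumeration is too expensive.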

Assessment Methods

- XAI Models Security: Evaluating risks and vulnerabilities in explainable ML/DL models to ensure robustness and resilience against attacks.

- XAI Models Performance: Measuring model metrics and explainability indicators to optimize performance.

- XAI Assets Sharing: Facilitating collaboration through the sharing of XAI assets and resources.

Our X-by-Design Services

X-LEARN

Consultancy services for defining or identifying the best methodologies or models to integrate explainability into the design phase of end users’ projects.

X-APPLY

Analysis of the current AI status and of the most effective path for integrating explainability-based digital solutions in industrial contexts, delivered through IT services.

We are committed to pioneering the future of AI by championing transparency, reliability, and collaboration!

Do you want to discover more about our services?

Why is Explainable AI important?

Explainability is often elusive in machine learning, as models frequently lack it inherently. Achieving it can demand extensive retraining, a laborious and computationally intensive endeavor.

An explainable design hinges on the ability to extract qualifiers from data, identifying heuristically meaningful features. Additionally, it entails establishing causal relationships between inputs and outputs, thereby enhancing the interpretability of the model’s decision-making process.

Increase Human Trust in AI & Transparency and Reliability of AI

- Transparency: Revealing the decision-making process builds trust.

- Understanding: Users comprehend AI decisions, fostering trust.

- Error Detection: Identifying and correcting mistakes bolsters reliability.

- Consistency: Providing consistent explanations ensures predictability.

- Fairness and Accountability: Verification of unbiased decisions reinforces trust.

- User Empowerment: Understanding empowers users, fostering trust.