Within this dedicated section, the best explainable visualisations to understand anomalies are displayed. Anomalies are deviations or irregularities in data patterns that stand out from the expected or normal behavior. The primary objective of this section is to indicate how to analyse irrigularities in order to recognise which features contribute to anomalies.

A textual representation can be used to facilitate AI model explainability by converting complex model outputs into human-readable text. Techniques like natural language processing and attention mechanisms help highlight key features and decision-making processes, providing concise and interpretable explanations. This promotes transparency and trust in AI systems for effective communication with end-users.

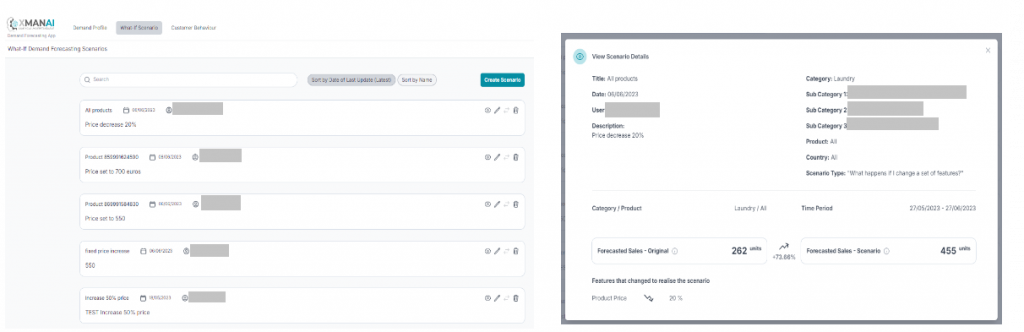

A text-based explaination is used by a XMANAI demonstrator to illustrate how changing the value of a specific feature will change the outcome of the prediction in a what-if scenario forecast. The user can define specific what-if scenario, changing value of some features and the results is visualised with a simple textual example.

XMANAI Project Coordinator

Michele Sesana – TXT

e-mail: michele.sesana@txtgroup.com

XMANAI Scientific Coordinator

Dr. Yury Glikman – Fraunhofer FOKUS

e-mail: yury.glikman@fokus.fraunhofer.de

XMANAI Technical Coordinator

Dr. Fenareti Lampathaki – SUITE5

e-mail: fenareti@suite5.eu

TXT e-solutions S.p.A.

Via Frigia 27 – 20126 Milano

t: +390225771804