What is the XMANAI Minimum Viable Product (MVP)?

In XMANAI, the exploration of the Explainable AI landscape, the analysis of the business requirements from its four demonstrators, and the elicitation of the technical requirements have culminated in the definition of the Minimum Viable Product (MVP). In general, an MVP is an early release of a product with the minimum set of features and functionalities needed to satisfy early adopters, who in turn can promptly provide feedback for future product improvements. Within the XMANAI scope, the MVP represents the overall mindset and strategy adopted for efficiently distributing the development and integration workload, continuously testing end-user reactions, and validating the methodological ideas and hypotheses.

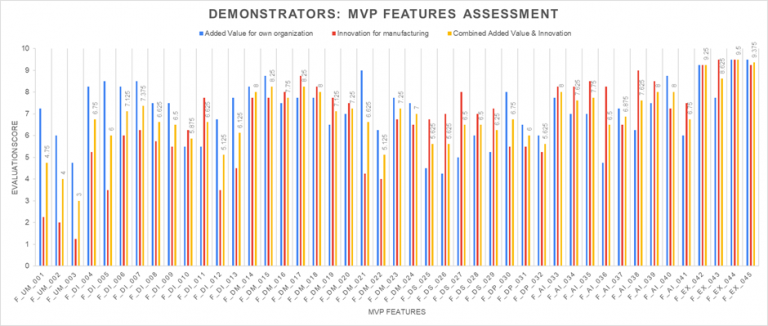

The wide range of technical requirements brainstormed by the XMANAI technical partners has been grouped and consolidated into different MVP features, which have been assessed for (a) their added value (by demonstrator partners), as indicatively depicted in Figure 1, and (b) their feasibility (by technical partners), in order to produce a prioritized list of features and functionalities as part of the draft XMANAI MVP.

Following the MoSCoW approach (which classifies requirements into must-have, should-have, could-have, and won’t-have, or will not have right now), the set of features that comprise the preliminary XMANAI MVP as of September 2021 focuses on the Explainable AI features as must-have, i.e.:

- XMANAI_F_AI_038 Collaboration over AI pipelines creation (experiments comparison, history of events, simulations of different settings, models, and methods for same task)

- XMANAI_F_EX_042 Explainability Methods Management (add/remove/configure, register/import)

- XMANAI_F_EX_043 Collaboration over AI model/results/pipelines explanations (application of explainability methods at AI pipeline or model level, results querying)

- XMANAI_F_EX_044 Explainability Results Visualisation (various charts, adjust based on user profile)

- XMANAI_F_EXI_045 Explainability Results Evaluation (allow manual feedback & results validation)

- XMANAI_F_DM_023 AI Model Security Assessment
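
To illustrate how added-value and feasibility assessments could feed a MoSCoW classification, here is a minimal Python sketch. It is not the procedure the XMANAI partners actually used, which the text above does not specify: the 0 to 5 scoring scale, the rule of taking the lower of the two scores, the thresholds, and the placeholder feature identifiers are all assumptions made purely for illustration.

```python
from dataclasses import dataclass

# Display order of the MoSCoW categories, highest priority first.
CATEGORY_ORDER = ["must-have", "should-have", "could-have", "won't-have"]


@dataclass(frozen=True)
class Feature:
    feature_id: str      # placeholder identifier, not a real XMANAI feature
    added_value: float   # hypothetical 0-5 score from demonstrator partners
    feasibility: float   # hypothetical 0-5 score from technical partners


def moscow_category(feature, must=4.0, should=3.0, could=2.0):
    """Map a feature to a MoSCoW category.

    Assumption: a feature is only as strong as its weaker dimension,
    so the lower of the two scores is compared against the thresholds.
    """
    score = min(feature.added_value, feature.feasibility)
    if score >= must:
        return "must-have"
    if score >= should:
        return "should-have"
    if score >= could:
        return "could-have"
    return "won't-have"


def prioritize(features):
    """Return (feature_id, category) pairs, highest priority first.

    Ties within a category are broken by descending added value.
    """
    ranked = sorted(
        features,
        key=lambda f: (CATEGORY_ORDER.index(moscow_category(f)), -f.added_value),
    )
    return [(f.feature_id, moscow_category(f)) for f in ranked]


if __name__ == "__main__":
    # Invented scores for placeholder features, for demonstration only.
    sample = [
        Feature("FEATURE_A", added_value=4.5, feasibility=4.0),
        Feature("FEATURE_B", added_value=3.5, feasibility=2.5),
        Feature("FEATURE_C", added_value=5.0, feasibility=1.0),
        Feature("FEATURE_D", added_value=3.0, feasibility=4.0),
    ]
    for feature_id, category in prioritize(sample):
        print(f"{feature_id}: {category}")
```

Taking the minimum of the two scores means that a highly valued but barely feasible feature (such as the placeholder FEATURE_C) drops to won't-have for now. A weighted sum would be an equally plausible alternative if the two assessments were meant to compensate for each other.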

Through the consolidation of the XMANAI MVP, it became evident that explainability needs to be pursued in XMANAI along three axes:

- Understanding Data as the first step towards AI explainability, which can be ensured by properly ingesting data, extracting its structure and semantics, and allowing for sample data exploration, summary statistics, and visualizations.

- Explaining Results of AI models in a comprehensive yet interactive way through different explainability techniques, in order to bring business users and data scientists/engineers onto the same page.

- Understanding the inner workings of AI models in order to build robust and reliable AI solutions that inspire trust among manufacturers.

In summary, the XMANAI MVP shall guide the research and development activities of the project throughout its lifecycle and will be maintained as a ‘live’ document that is continuously updated with new research findings and value-adding feature requests.