Explainable AI: a key to trust and acceptance of AI-based decision support systems

Artificial intelligence is often based on complex algorithms and mathematical models that are difficult to understand. Modern AI, which relies mainly on neural networks, typically uses ‘black-box’ models: ‘boxes’ that make decisions without the user knowing what is inside them or how those decisions are reached. These models are extremely powerful (they are used in natural language generation and translation, image recognition, and autonomous driving), but they are risky to adopt in critical situations and raise questions about the accountability of those who use them.

In the financial sector, for example, explaining the decisions of artificial intelligence systems is necessary to meet regulatory requirements and to provide analysts with the information they need to review high-risk decisions.

In factory operations, artificial intelligence models that support decision-making are increasingly being adopted by large companies and are beginning to attract the interest of SMEs as well, thanks to recent developments that have made them easier to adopt and cheaper to implement. However, many of these models are not easily understood by the people who interact with them, which makes their use more uncertain and limits their deployment.

Operators' trust in, and acceptance of, the AI decision-making mechanisms they work with are therefore prerequisites for large-scale adoption.

Trust and acceptance are achieved by making AI models more comprehensible and enabling users to understand how the machine reaches its decisions. This has become a major challenge for vendors of AI applications for the corporate world.

Consider, for example, a production line where workers use heavy and potentially dangerous equipment to produce steel pipes. Company executives hire a team of machine learning (ML) professionals to develop an artificial intelligence (AI) model that can help frontline workers make safe decisions, in the hope that this model will revolutionize their business by improving efficiency and worker safety. After an expensive development process, the manufacturer introduces its complex, high-accuracy model on the production line, expecting to see the investment pay off. Instead, worker adoption is extremely limited. What went wrong? Although the model in this example was safe and accurate, the target users did not trust the artificial intelligence system because they did not know how it made decisions. End users deserve to understand the underlying decision-making processes of the systems they have to use, especially in high-risk situations.

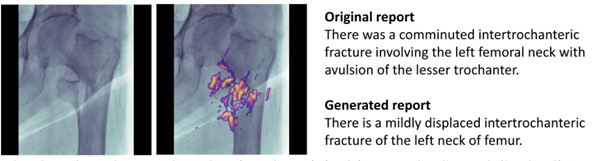

As an example from another field, suppose we have image recognition software that can diagnose diseases from, say, an X-ray: this type of software, based on artificial intelligence (AI), is already widely used in hospitals as decision support for radiologists. Suppose this software classifies a patient’s X-ray as containing a bone fracture. A doctor who sees the software’s diagnosis but cannot find the fracture is faced with two options: either assume that the software was wrong and disregard the report, putting the patient’s health at risk, or spend more time trying to interpret the software’s diagnosis, perhaps in consultation with colleagues. If, on the other hand, the software were able not only to flag the image but also to indicate where the fracture is and what type it is, it would be much easier for the doctor to reach a conclusion.

Explainable AI (XAI) is an emerging research field that aims to solve these problems by helping people understand how AI arrives at its decisions. Explanations can be used to help lay people, such as end users, better understand how AI systems work and clarify questions and doubts about their behaviour; this increased transparency helps build trust and supports system monitoring and verifiability.
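To make the idea of an explanation more concrete, the following is a minimal sketch, not a description of any particular XAI method or product. It assumes a deliberately simple, hypothetical linear risk score for a machine on a production line, and shows how each input's contribution to the score can be reported alongside the score itself. The feature names, weights, and baseline values are invented for illustration only.

```python
from typing import Dict, List, Tuple

# Hypothetical model: all names and numbers below are illustrative assumptions.
WEIGHTS: Dict[str, float] = {
    "temperature_c": 0.04,
    "vibration_mm_s": 0.30,
    "hours_since_service": 0.002,
}
# Typical operating values, used as the reference point for the explanation.
BASELINE: Dict[str, float] = {
    "temperature_c": 60.0,
    "vibration_mm_s": 2.0,
    "hours_since_service": 100.0,
}
BASE_SCORE = 0.10  # score of a machine operating exactly at the baseline


def contributions(reading: Dict[str, float]) -> Dict[str, float]:
    """Return how much each feature moves the score away from BASE_SCORE."""
    return {name: WEIGHTS[name] * (reading[name] - BASELINE[name]) for name in WEIGHTS}


def risk_score(reading: Dict[str, float]) -> float:
    """Linear score: the base score plus the sum of all feature contributions."""
    return BASE_SCORE + sum(contributions(reading).values())


def explain(reading: Dict[str, float]) -> List[Tuple[str, float]]:
    """Rank features by the absolute size of their contribution, largest first."""
    return sorted(contributions(reading).items(), key=lambda item: abs(item[1]), reverse=True)


if __name__ == "__main__":
    reading = {"temperature_c": 70.0, "vibration_mm_s": 4.0, "hours_since_service": 150.0}
    print(f"risk score: {risk_score(reading):.2f}")
    for name, value in explain(reading):
        print(f"  {name}: {value:+.2f}")
```

For a linear model like this one, the contributions add up exactly to the difference between the score and the base score, so the operator sees not only the result but also which measurement drove it. Black-box models such as neural networks do not decompose this directly, which is why dedicated explanation techniques are needed.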

The EU-funded XMANAI project has developed a number of industrial pilot cases in the automotive and appliance industries. These pilots demonstrated that explainability is key to stimulating the adoption of AI in various sectors, because it provides transparent and understandable information about an algorithm's decisions and results.