XAI Model Guard: The XMANAI AI Models Security Framework

As manufacturing organizations embrace Industry 4.0, the initiative that is moving the sector towards smart factories, their adoption of Artificial Intelligence (AI), machine learning, and analytics technologies is also growing. This digital transformation has in turn increased the importance of effective cybersecurity: organizations need measures that mitigate, and where possible eliminate, operational threats and data privacy vulnerabilities.

The growing adoption of AI by manufacturing organizations has also drawn the attention of adversaries who wish to manipulate these systems for malicious purposes. As AI technologies advance and are increasingly adopted to make production lines more agile and flexible, their use introduces cybersecurity challenges specific to the manufacturing domain. While AI offers great potential to revolutionize production lines with smart and effective operations, it also introduces several security risks. Multiple reported security incidents show that intelligent manufacturing systems relying on these technologies can be probed and manipulated by bad actors.

Within XMANAI, a security framework has been designed to safeguard the security and integrity of the AI models produced in the XMANAI platform. The framework assesses different layers of information with the aim of evaluating potential security risks and reporting them to the asset owner. It is realised by the XAI Model Guard, one of the core components of the XMANAI platform architecture.

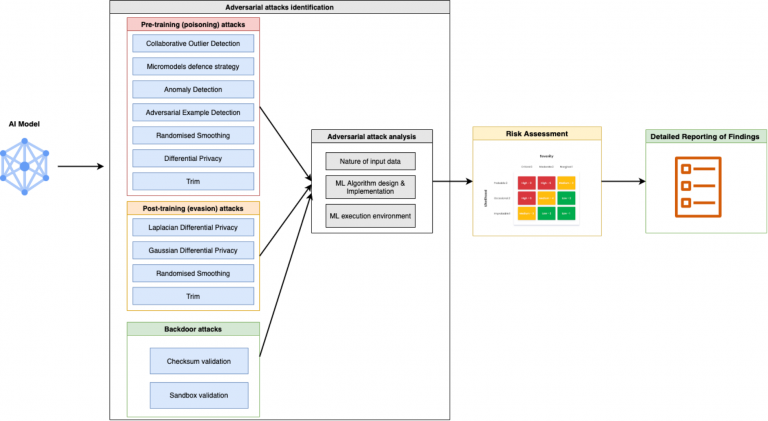

XAI Model Guard identifies adversarial attacks by taking into consideration the specifics of each attack type. These include, among others, the nature of the input or training data, the design and implementation of the ML algorithm involved in the training process, the details of the environment in which the ML process is executed, and the attacker's knowledge of the ML training and execution process.

Currently, XAI Model Guard identifies the following adversarial attack types:

- Pre-training (poisoning) attacks: The attacker introduces adversarial data points into the training data in order to significantly deteriorate the performance of the model. The defence mechanisms suggested and implemented by XAI Model Guard, based on an extensive literature review, include Collaborative Outlier Detection, Micromodels, Anomaly Detection, Adversarial Example Detection, Randomised Smoothing, Differential Privacy and Trim.

- Post-training (evasion) attacks: The attacker modifies the input data so that the AI model fails to identify, or is deliberately made to misclassify, specific input. The defence mechanisms suggested and implemented by XAI Model Guard, based on an extensive literature review, include Laplacian Differential Privacy, Gaussian Differential Privacy, Randomised Smoothing and Trim.

- Backdoor attacks: The attacker alters and controls the behavior of the model for specific input. The defence mechanisms suggested and implemented by XAI Model Guard, based on an extensive literature review, include Checksum Validation and Sandbox Validation.
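As an illustration of the simplest mechanism in the list above, Checksum Validation can be sketched as comparing a digest of the stored model artifact against a digest recorded when the model was trained. This is a minimal sketch under stated assumptions, not the XAI Model Guard implementation: the function names `compute_checksum` and `validate_checksum`, the choice of SHA-256, and the file-based model storage are all hypothetical and chosen only for illustration.

```python
import hashlib
import hmac
from pathlib import Path


def compute_checksum(model_path: Path, chunk_size: int = 1 << 20) -> str:
    """Return the SHA-256 hex digest of a serialized model file (hypothetical helper)."""
    digest = hashlib.sha256()
    with model_path.open("rb") as fh:
        # Read in chunks so large model files do not need to fit in memory.
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def validate_checksum(model_path: Path, expected: str) -> bool:
    """Return True only if the file still matches the digest recorded at training time."""
    # Assumption: `expected` is the hex digest stored when the model was saved.
    # compare_digest performs a constant-time comparison of the two strings.
    return hmac.compare_digest(compute_checksum(model_path), expected.lower())
```

A mismatch only shows that the stored artifact changed after the digest was recorded. It says nothing about a backdoor introduced before or during training, which is presumably why the list pairs this mechanism with Sandbox Validation.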

As a second step, once the possible adversarial attack type has been identified, XAI Model Guard first assesses the risks associated with that attack type and then reports the findings in detail to the asset owner, who can evaluate them and take the proper risk mitigation measures. In particular, XAI Model Guard performs an extended analysis of the risks associated with the possible attack, taking into consideration all aspects of the trained ML model's environment (including input data, training process, algorithm implementation and execution), and provides the asset owner with a detailed report of the risks for the particular ML model and each specific adversarial attack type.

By implementing the described security framework, XAI Model Guard thus provides an additional security layer for the XMANAI models that focuses on the integrity and security of the stored ML models. XAI Model Guard is implemented in the form of blocks, which are made available to the users of the XMANAI platform through the platform's intuitive AI pipelines designer, the XAI Pipeline Designer.
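The reporting step described above could be sketched as a small data structure that pairs each identified attack type with the affected aspects of the model environment and the defence mechanisms listed earlier in this article. Everything in this sketch is an assumption made for illustration: the names `DEFENCES_BY_ATTACK`, `RiskFinding` and `build_report`, the dictionary keys, and the report layout are hypothetical and do not describe the actual XAI Model Guard report format.

```python
from dataclasses import dataclass, field

# Defence mechanisms per attack type, copied from the list in this article.
DEFENCES_BY_ATTACK = {
    "poisoning": [
        "Collaborative Outlier Detection", "Micromodels", "Anomaly Detection",
        "Adversarial Example Detection", "Randomised Smoothing",
        "Differential Privacy", "Trim",
    ],
    "evasion": [
        "Laplacian Differential Privacy", "Gaussian Differential Privacy",
        "Randomised Smoothing", "Trim",
    ],
    "backdoor": ["Checksum Validation", "Sandbox Validation"],
}


@dataclass
class RiskFinding:
    """One entry of a hypothetical report for the asset owner."""
    attack_type: str
    affected_aspects: list[str]  # e.g. "input data", "training process"
    suggested_defences: list[str] = field(default_factory=list)


def build_report(model_name: str, identified: dict[str, list[str]]) -> dict:
    """Map each identified attack type to a finding with its listed defences."""
    findings = []
    for attack_type, aspects in identified.items():
        if attack_type not in DEFENCES_BY_ATTACK:
            raise ValueError(f"unknown attack type: {attack_type}")
        findings.append(
            RiskFinding(attack_type, list(aspects), list(DEFENCES_BY_ATTACK[attack_type]))
        )
    return {"model": model_name, "findings": findings}


# Usage: a hypothetical model flagged for a possible backdoor attack.
report = build_report("demo-model", {"backdoor": ["execution"]})
```

Keeping the attack-to-defence mapping in one table means a report entry always lists the same mechanisms the framework documents for that attack type, and an unrecognised attack type fails loudly instead of producing an empty finding.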