How are the final MVP features contributing to the X-By-Design Concept?

Introduction to the Final MVP

As already discussed in a previous post, the XMANAI Minimum Viable Product (MVP) is designed with the minimum set of features and functionalities that can satisfy early adopters who, in turn, can promptly provide feedback for future improvements.

In its final iteration, the XMANAI MVP builds on its previous releases. The experience gained from the development activities informed its implicit technical feasibility assessment, while the feedback acquired from the early demonstration activities provided additional insights for the final feature prioritization. For the final MVP release, the features were re-evaluated to reflect the project’s advancements: instead of their planned priority (as in the previous MVP releases), their actual status (“Will-Have” or “Won’t-Have”) is reported.

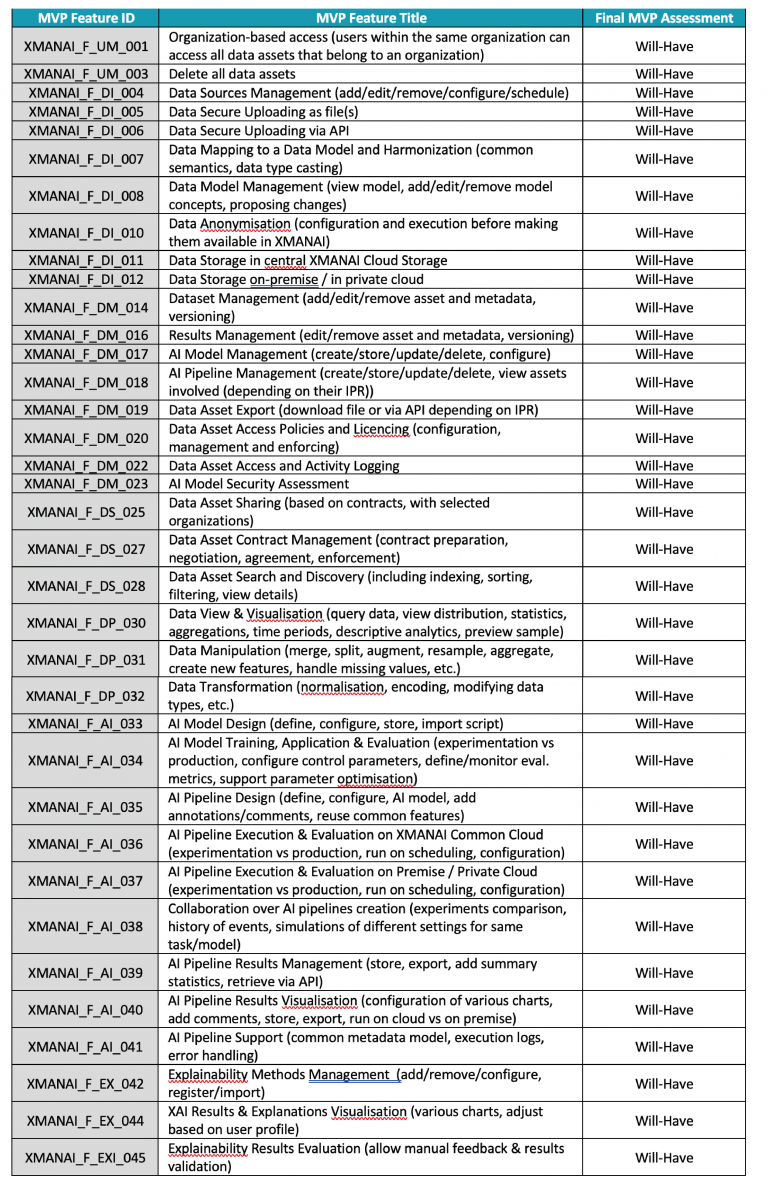

The features of the final MVP are classified under the following categories: Data Integration (DI), Data Management (DM), Data Sharing (DS), Data Preparation (DP), Artificial Intelligence (AI), Explainability (EX), and User Management (UM), as presented below.

X-By-Design Concept from a Technical Perspective

To transition towards an Explainable-by-Design paradigm (also addressed in a previous post), XMANAI, from a technical perspective and taking the final MVP into consideration, views and supports explainability under three (3) interlinked and collaborative perspectives:

- Data Explainability that focuses on a concrete understanding of data in terms of semantics and structure (data types) per feature in order to gain insights into the input data, achieved through mapping to a common data model. Interactive data exploration and visualization, which allow viewing data distribution/profiling charts or summary statistics (e.g. number of missing values, min/max values), can be leveraged to monitor potential data drift or bias issues. XMANAI contributes to this axis through:

- Model Explainability that concerns understanding the different AI (ML/DL) models in terms of global interpretability (answering how a model works across all of its predictions) and local interpretability (answering how a model generates a specific prediction, given specific data points). Different explainability techniques can be employed depending on the model family and type, e.g. black-box or opaque models, in order to shed light on the model design and training phase. Indicative categories include: (a) Explanations by simplification (or surrogate models), referring to techniques that approximate an opaque model using a simpler one that is easier to interpret; (b) Feature relevance explanations, which attempt to explain a model’s decision by quantifying the influence of each input variable, serving as a first step towards gaining insights into the model’s reasoning; (c) Explanations through directly interpretable models (since transparent models like decision trees, linear and logistic regression are directly interpretable). Typical techniques associated with the above categories are: Local Interpretable Model-agnostic Explanations (LIME), SHapley Additive exPlanations (SHAP), Anchors (High-Precision Model-Agnostic Explanations), Partial Dependence Plot (PDP), Counterfactual Explanations, CAMEL (Causal Models to Explain Learning).

- Result Explainability that promotes a shared understanding of results and their translation into concrete actions in an appropriate style/format. At this step, post-hoc explanations over the results are created (after the model is trained) and may include: (a) Visualizations that typically act as the primer for communicating results to the involved stakeholders in order to inform them about the decision boundary or how features interact with each other; (b) Text explanations that convey in natural language how to take action and can be automatically generated (through natural language generation techniques) or elicited with the involvement of the target audiences; (c) Explanations by example that extract representative instances from the training dataset to demonstrate how the model operates, much as humans describe a broader outcome/process by providing specific examples; (d) Counterfactual Explanations that aim to find the model’s decision boundaries with respect to specific input values.
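The first two perspectives can be made concrete with a small sketch. It uses only the Python standard library and is not taken from the XMANAI platform: the function names, the sensor-style feature names (`temperature`, `vibration`) and the toy model are all hypothetical. The first function computes the kind of summary statistics mentioned under Data Explainability (missing values, min/max). The second illustrates a feature relevance explanation using permutation importance, a simple model-agnostic technique chosen here for brevity in place of the LIME or SHAP techniques named above.

```python
import random
from statistics import mean


def profile_feature(values):
    """Summary statistics for one numeric feature; None marks a missing value.

    Assumes at least one value is present.
    """
    present = [v for v in values if v is not None]
    return {
        "missing": len(values) - len(present),
        "min": min(present),
        "max": max(present),
        "mean": mean(present),
    }


def mean_squared_error(model, rows, targets):
    """Average squared difference between model predictions and targets."""
    return mean((model(row) - t) ** 2 for row, t in zip(rows, targets))


def permutation_importance(model, rows, targets, feature, repeats=10, seed=0):
    """Average increase in error when the values of one feature are shuffled."""
    rng = random.Random(seed)
    baseline = mean_squared_error(model, rows, targets)
    increases = []
    for _ in range(repeats):
        column = [row[feature] for row in rows]
        rng.shuffle(column)
        # Copy each row, replacing only the shuffled feature.
        shuffled = [dict(row, **{feature: v}) for row, v in zip(rows, column)]
        increases.append(mean_squared_error(model, shuffled, targets) - baseline)
    return mean(increases)


# Hypothetical readings for one feature, with one missing value.
temperature_profile = profile_feature([20.5, None, 22.0, 19.0])


# Hypothetical model that relies on temperature and ignores vibration.
def toy_model(row):
    return 2.0 * row["temperature"]


rows = [{"temperature": float(i), "vibration": float((i * 7) % 5)} for i in range(20)]
targets = [toy_model(row) for row in rows]

importance = {
    name: permutation_importance(toy_model, rows, targets, name)
    for name in ("temperature", "vibration")
}

print(temperature_profile)
print(importance)
```

Because the toy model never reads `vibration`, shuffling that feature leaves the error unchanged and its importance is zero, while shuffling `temperature` increases the error. A real pipeline would apply the same idea to a trained model and held-out data.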

In order to produce AI models and pipelines that are explainable by design, XMANAI has also delivered appropriate assessment methods and techniques to address a number of complementary challenges that are currently significant pain points for data scientists. These are classified under:

- XAI Models Security that performs a risk and vulnerability assessment over different Explainable ML/DL models to offer immunity and robustness. The aim is to anticipate, in a timely manner, unintended bias and adversarial attacks and to safeguard the models against them. Such attacks may try to manipulate a model's underlying algorithm after learning which input should be fed to it so as to lead it to a specific output, and include poisoning attacks (attempting to maliciously manipulate the training dataset) and evasion attacks.

- XAI Models Performance, referring to: (a) the technical metrics over the performance of an Explainable ML/DL model, for which baseline thresholds need to be set so that they can be tracked in parallel with transparency and security. Typical performance metrics are accuracy, F1, sensitivity, scalability; (b) the pure explainability aspects (e.g. explanation usefulness, satisfaction, fidelity, completeness, ambiguity, lack of bias) that gauge how appropriate (in terms of content and delivery method) the generated explanations have been, as evaluated by the target audiences that consumed the explanations (rather than the experts who created the corresponding XAI models).

- XAI Assets Sharing that allows interaction among different types of stakeholders who share their assets and collaboratively work to build a solution for a specific manufacturing case/problem that reaches consensus and is broadly accepted.
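As an illustration of the technical metrics listed under XAI Models Performance, the following minimal sketch computes accuracy, sensitivity and F1 for a binary classifier and flags the metrics that fall below a baseline threshold. It is not the XMANAI implementation: the function names, the example labels and the threshold values are assumptions made for the example.

```python
def classification_metrics(y_true, y_pred, positive=1):
    """Accuracy, sensitivity (recall) and F1 for binary labels."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    tn = len(pairs) - tp - fp - fn

    def ratio(numerator, denominator):
        # Report 0.0 instead of failing when a denominator is zero.
        return numerator / denominator if denominator else 0.0

    return {
        "accuracy": ratio(tp + tn, len(pairs)),
        "sensitivity": ratio(tp, tp + fn),
        "f1": ratio(2 * tp, 2 * tp + fp + fn),
    }


def below_baseline(metrics, thresholds):
    """Names of the metrics whose value is under its baseline threshold."""
    return [name for name, minimum in thresholds.items() if metrics[name] < minimum]


# Hypothetical ground truth and predictions (1 = positive class).
y_true = [1, 1, 1, 1, 0, 0, 0, 0]
y_pred = [1, 1, 1, 0, 0, 0, 1, 1]

# Hypothetical baseline thresholds agreed for this model.
thresholds = {"accuracy": 0.6, "sensitivity": 0.7, "f1": 0.7}

metrics = classification_metrics(y_true, y_pred)
alerts = below_baseline(metrics, thresholds)

print(metrics)
print(alerts)
```

With these example labels, accuracy is 0.625 and sensitivity is 0.75, while F1 is about 0.67, so only F1 is reported as below its baseline. The explainability aspects in (b), such as usefulness or satisfaction, depend on feedback from the target audiences and cannot be derived from predictions alone.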