The Draft Catalogue of XMANAI XAI Models

The first round of XMANAI Hybrid XAI models is about to come into action with the alpha release of the XMANAI platform!

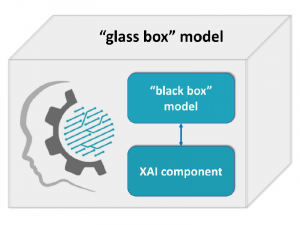

A draft selection of Hybrid XAI models, able to address concrete manufacturing use cases, will populate the XMANAI Models Catalogue as an integral part of the XMANAI platform. Following the Explainable AI paradigm that lies at the core of the project, "black box" models are transformed into "glass box" solutions by coupling them to additional explainability layers. The "interpretability versus performance" trade-off is therefore overcome: the quest for interpretable solutions does not require sacrificing performance by restricting the search space to simple algorithms, since under the XMANAI approach the behavior and predictions of complex AI models can be explained as well.

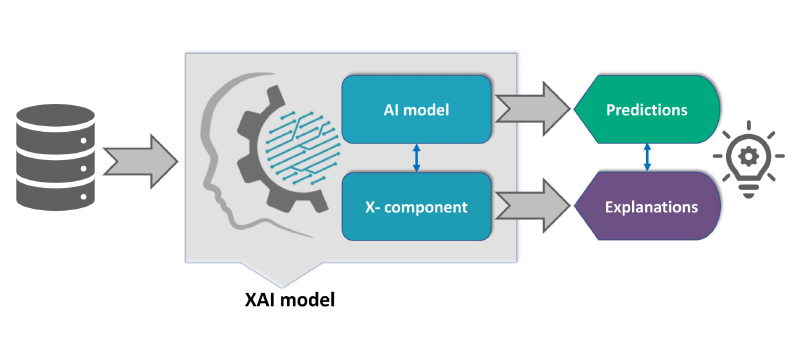

The XMANAI XAI models in the Draft Catalogue were selected to address the tasks involved in the realistic use case scenarios put forth by the XMANAI demonstrators, including production planning, maintenance scheduling, product quality optimization, product demand forecasting and process optimization. The requirements for accuracy and for interpretability of results are assigned to different components, so that each component of the XAI model is dedicated to one of two objectives:

- the AI component ("black box" model) is responsible for optimizing performance in each case, while

- the adjacent Explainability layer (X-component) is responsible for delivering meaningful explanations to the end user that interpret the AI model's behavior and predictions.
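To make the division of labor concrete, here is a minimal sketch of an AI component paired with a separate explainability layer. It assumes permutation feature importance as the explanation technique, which is only one illustrative choice and not necessarily what the XMANAI models use; `BlackBoxModel` and `PermutationExplainer` are hypothetical names, not part of any XMANAI API. The point it illustrates is that the explainer only calls the model's `predict()` method, so the AI component can be optimized for performance without being constrained by the explanation step.

```python
import random
from typing import Callable, List, Sequence

Row = List[float]
Metric = Callable[[Sequence[float], Sequence[float]], float]


class BlackBoxModel:
    """Hypothetical AI component: only its predict() method is visible to the explainer."""

    def __init__(self, weights: Sequence[float]) -> None:
        self.weights = list(weights)

    def predict(self, rows: Sequence[Row]) -> List[float]:
        # Stand-in for any complex model; here simply a weighted sum of the features.
        return [sum(w * x for w, x in zip(self.weights, row)) for row in rows]


def mse(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    """Mean squared error (lower is better)."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


class PermutationExplainer:
    """Hypothetical X-component: explains a fitted model without modifying it."""

    def __init__(self, model: BlackBoxModel, metric: Metric, n_repeats: int = 5, seed: int = 0) -> None:
        self.model = model
        self.metric = metric
        self.n_repeats = n_repeats
        self.rng = random.Random(seed)

    def explain(self, rows: Sequence[Row], targets: Sequence[float]) -> List[float]:
        """Return, per feature, the mean increase in error when that feature is shuffled."""
        baseline = self.metric(targets, self.model.predict(rows))
        importances = []
        for j in range(len(rows[0])):
            increases = []
            for _ in range(self.n_repeats):
                column = [row[j] for row in rows]
                self.rng.shuffle(column)
                # Replace feature j with its shuffled values, breaking its link to the target.
                permuted = [list(row[:j]) + [value] + list(row[j + 1:]) for row, value in zip(rows, column)]
                increases.append(self.metric(targets, self.model.predict(permuted)) - baseline)
            importances.append(sum(increases) / len(increases))
        return importances


# Usage: feature 0 drives the prediction, feature 1 is ignored by the model.
rows = [[float(i), float(i % 3)] for i in range(10)]
model = BlackBoxModel([2.0, 0.0])
targets = model.predict(rows)
scores = PermutationExplainer(model, mse).explain(rows, targets)
print(scores)  # the ignored feature scores exactly 0.0
```

Because the explainer depends only on `predict()`, the toy `BlackBoxModel` could be swapped for any more complex model without changing the explanation code, which mirrors the separation of objectives described above.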

Although primarily focused on satisfying the demonstrators' needs, the XMANAI XAI models are also expected to generalize well to other tasks within the manufacturing landscape. To support different user journeys, the XMANAI Hybrid XAI models will be available in two states, each accessible through a corresponding XAI pipeline:

- Baseline models, to be trained from scratch by the user

- Trained models, ready to be directly applied or fine-tuned by the user on new data

Models are trained on data supplied by the XMANAI demonstrators and validated under the experimental setting defined by the XMANAI validation protocol, which was established collectively by the members of the consortium. End users will be able to view the metadata of XAI models and select a model configuration and explainability technique that suits their needs, while the respective training or inference sessions run within the private and secure environment provided by the XMANAI ecosystem. The initial catalogue will evolve along with the XMANAI project through its second and third rounds of trained XAI models, as the models are further trained, validated and deployed within the experimental settings of the XMANAI demonstrators.

Through an iterative process, XMANAI scientists and business users will together explore different ways of providing rich, user-centered explanations alongside performant solutions in these industrial settings. The goal is to identify and deliver the set of explanations that end users find easiest to understand in each manufacturing use case scenario. This collaborative approach is expected to maximize the impact of the XMANAI project, optimizing business added value while also increasing user trust.