XMANAI's Evaluation Framework

XMANAI has just finalized its Evaluation Framework to measure the impact that the XMANAI platform will have on the demonstrators!

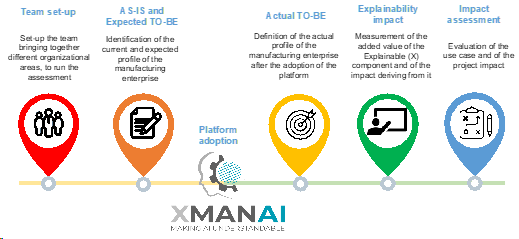

The assessment will be completed at the end of the project to evaluate the platform from both a technical and a business perspective, measuring how production and decision-making processes have evolved.

The assessment questionnaire is organized into two main blocks.

THE 6Ps ASSESSMENT

The 6Ps assessment provides a comprehensive analysis of the main dimensions that may be affected by Digital Transformation. It rests on the premise that it is important to strengthen not only the technical dimensions but also the so-called “socio-business” pillars.

Across the six dimensions, demonstrators will assess three different profiles: their current profile, the expected profile, and the actual profile at the end of the project. Comparing these profiles makes it possible to measure the transformation and to check whether expectations have been met.

The six dimensions of analysis (hence the name “6Ps”) are Product, Process, Platform, People, Partnership, and Performance. Each of them is evaluated through a number of sub-dimensions.
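To make the three-profile comparison concrete, here is a minimal Python sketch. It assumes, purely for illustration, that each dimension is scored on a 1-5 maturity scale; the scale, the `DimensionProfile` class, the `summarize` function and the scoring rule are hypothetical and not part of the XMANAI questionnaire. The change from the current to the actual profile is read as the transformation, and the difference between the actual and the expected profile shows whether expectations were met.

```python
from dataclasses import dataclass

# The six dimensions named in the 6Ps assessment.
DIMENSIONS = ("Product", "Process", "Platform", "People", "Partnership", "Performance")


@dataclass
class DimensionProfile:
    """Scores for one dimension on a hypothetical 1-5 maturity scale."""
    current: float   # profile before XMANAI
    expected: float  # profile the demonstrator expects to reach
    actual: float    # profile measured at the end of the project

    def transformation(self) -> float:
        """How far the demonstrator actually moved (actual - current)."""
        return self.actual - self.current

    def expectation_gap(self) -> float:
        """Positive if the result exceeded expectations, negative if it fell short."""
        return self.actual - self.expected


def summarize(profiles: dict[str, DimensionProfile]) -> dict[str, tuple[float, float, bool]]:
    """For each dimension, return (transformation, expectation gap, expectations met?)."""
    summary = {}
    for name in DIMENSIONS:
        p = profiles[name]
        gap = p.expectation_gap()
        summary[name] = (p.transformation(), gap, gap >= 0)
    return summary


if __name__ == "__main__":
    # Illustrative values only; these are not XMANAI results.
    demo = {d: DimensionProfile(current=2, expected=4, actual=3.5) for d in DIMENSIONS}
    for dim, (moved, gap, met) in summarize(demo).items():
        print(f"{dim}: moved {moved:+.1f}, vs expected {gap:+.1f}, met={met}")
```

Keeping the three profiles side by side, rather than storing only the final score, is what lets the same data answer both questions the assessment asks: how much changed, and whether that change matched what was expected.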

The People dimension is fundamental for measuring the transformation driven by the adoption of the Explainability component, which mostly affects workers. Four key aspects are analyzed, each answering the following questions:

- TEAMING: How do humans and AI work together to perform tasks? What type of interaction is there between humans and AI? What was the situation before XMANAI?

- AI INTEGRATION: How mature is the integration of AI into production? What was the situation before XMANAI?

- EXPLAINABILITY: How transparent are the implemented AI models, so that human users can understand and trust their decisions? What was the situation before XMANAI?

- AI DEVELOPMENT: How does the organization develop AI tools: internally or with the support of technology providers? What was the situation before XMANAI?

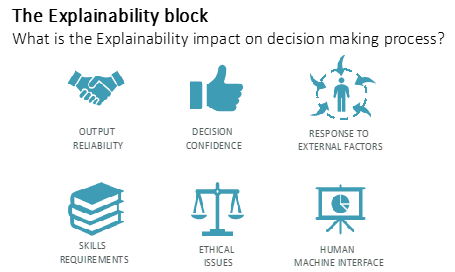

THE EXPLAINABILITY BLOCK

The “Explainability” block measures the impact of Explainable AI (XAI) on the decision-making process.

Each company is invited to reflect on the advantages of adopting an XAI solution rather than a plain AI solution, to consider how much the X component improves the understanding of a model's output, and to identify any additional activities needed to adopt it properly (e.g. training for workers, new hires, …).

The section analyses six aspects related to Explainable AI, each answering the following question:

- OUTPUT RELIABILITY: Is the output of the AI model considered more reliable thanks to the Explainability component?

- DECISION CONFIDENCE: Do you feel more confident in your decisions thanks to the Explainability component?

- RESPONSE TO EXTERNAL FACTORS: Has the way decisions are made under pressure changed thanks to the Explainability component?

- SKILLS REQUIREMENTS: Does your company require any additional skills to manage the Explainability component?

- ETHICAL ISSUES: Do you think the Explainability component will prevent possible ethical issues?

- HUMAN-MACHINE INTERFACE: How effective is “visualizing” information as an Explainability component?